Behold, a giant AI-generated rat dick

Earlier this week, scientific journal Frontiers in Cell and Developmental Biology published a paper entitled “Cellular functions of spermatogonial stem cells in relation to JAK/STAT signaling pathway.” In it, three researchers from Xi’an Honghui Hospital and Xi’an Jiaotong University aimed to summarise current research on sperm stem cells.

They also showed off an absolutely enormous, wildly anatomically incorrect, AI-generated rat dick.

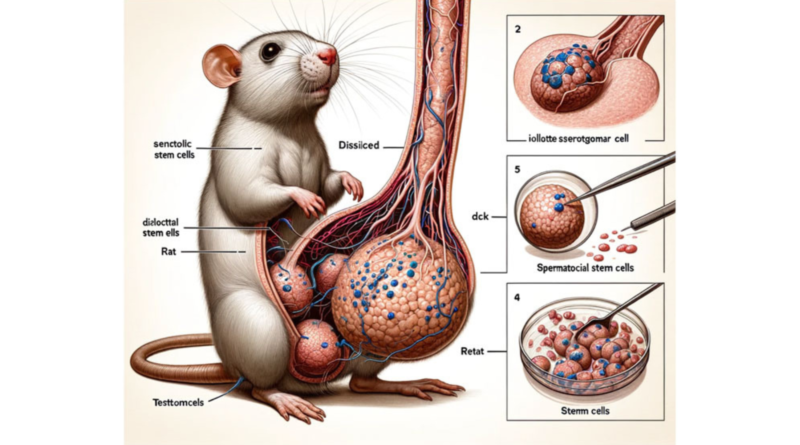

The article featured three apparently illustrative images, all of which were created by AI art generator Midjourney, and all of which were blatantly incorrect. The most obvious errors concerned the Rodent of Unusual Size depicted in the article’s first figure. This illustration ostensibly showed “spermatogonial stem cells, isolated, purified and cultured from rat testes.” Though not something the average person necessarily wants to see, it would at least make sense in the context of the paper.

Unfortunately, what the picture actually showed was a white rat standing on its hind legs and gazing reverently up at its gargantuan front tail. Reaching for the heavens, said magnum dong was cut into a cross section to display a truly nonsensical collection of unidentifiable organs. This included four round structures that might have been intended to represent gonads, but were far from the normal size or position for such glands. Though rat testicles may be quite large, they typically aren’t over twice the size of the animal’s head unless something is horribly wrong.

I am not a rodent penis connoisseur. I doubt I would be able to correctly distinguish a rat wang from a chipmunk’s if the appendages were presented to me in some sort of horrific tiny peen lineup. I do know, however, that mammalian schlongs don’t tend to grow larger than the creature they are attached to.

The fact that rats aren’t running around dragging giant meat swords along the ground was already a fair giveaway that something was rotten with the state of the diagram. But even if you’d never seen a rat before in your life — and considered it plausible that they straddle their salami like a hobby horse — the image’s absurd labelling would be enough to tip you off. I’m fairly sure that there is no such thing as “testtomcels,” “diƨlocttal stem ells,” or a “iollotte sserotgomar cell.”

The article’s subsequent two images were better in that they didn’t illustrate a monster anaconda large enough to swallow its owner. Unfortunately, they weren’t much improved in terms of usefulness, accuracy, or just not being complete nonsense. Like the first diagram, each bore meaningless labels masquerading as useful information, looking just authoritative enough that someone might accept them at a glance.

It isn’t clear exactly how these diagrams made it all the way to publication without being picked up. The article was edited by a member of Frontiers’ editorial team and reviewed by two other parties, which means that, counting the three authors, at least six people gave it their approval. In a statement to Motherboard, one of the reviewers said that he had only assessed the paper for its scientific aspects, and that it was not his responsibility to check the accuracy of the AI-generated images.

Though Frontiers hasn’t explicitly said “sorry for the scary rat dick,” it released a statement on Tuesday saying that it is aware of “concerns” regarding the article and is investigating the matter. The paper has since been redacted, and the names of the editor, reviewers, and one of the three authors removed.

“An investigation is currently being conducted and this notice will be updated accordingly after the investigation concludes,” wrote Frontiers.

This isn’t the first time inappropriate reliance on generative AI has produced embarrassing professional consequences. Last year, two lawyers were fined for citing non-existent cases after they used OpenAI’s ChatGPT to prepare their legal filings. A third lawyer landed in trouble for citing fake cases generated by his client using Google Bard.

It’s a handy reminder not to put too much trust in AI for anything important, and perhaps consider an artist the next time you need an anatomically accurate ratwurst.