Most users on X never see Community Notes correcting misinformation

When Elon Musk took over X, formerly known as Twitter, one of his first moves was to overhaul the company’s content moderation policies. When it comes to misinformation, Musk depends on X’s users to deal with the problem in the form of Community Notes, a program that allows approved users to add context to posts that contain inaccuracies or falsehoods.

Unfortunately for Musk and company, this crowdsourced solution doesn’t appear to be working. And the influx of misinformation since Hamas attacked Israel on Oct. 7, and Israel subsequently began bombing Gaza, has only made matters worse.

X punts content moderation to Community Notes

X’s misinformation problem during the past few months has been so prominent that the EU threatened to take action against the company, launching an investigation that could result in X facing hefty fines.

Last month, X announced several overhauls to Community Notes in an attempt to placate EU officials. X CEO Linda Yaccarino published two dozen non-reply, non-retweet posts in October. Nine of those posts were about updates to the Community Notes program. That same month, X executive Joe Benarroch reached out to Mashable to provide a press release concerning the Community Notes updates, marking the first time Mashable heard from X since Elon Musk acquired the company last year.

X has made it clear in all of the above that it depends on Community Notes to fight misinformation. But Community Notes is failing.

According to a report from NBC News last month, and a report from Bloomberg last week, X often failed to show Community Notes fact-checks on viral posts spreading falsehoods in a timely manner. The amount of time it takes for a Community Note to appear on a post was a central piece of X’s updates to the program. Yet Bloomberg found that Community Notes take more than seven hours to show up on average, with some misinformation-spreading posts going days before appearing with a fact-check.

For the past two months, Mashable has also been monitoring Community Notes, tracking 50 viral posts that had an approved Community Note attached. Our goal, however, was to find out how many X users are seeing a Community Note after it is approved and the platform actually affixes the note to a post, making it publicly visible to X’s entire user base.

Mashable found that X is failing to get Community Notes in front of its users — even after the fact-check has been approved by the community.

Misinformation receives way more views than fact-checks

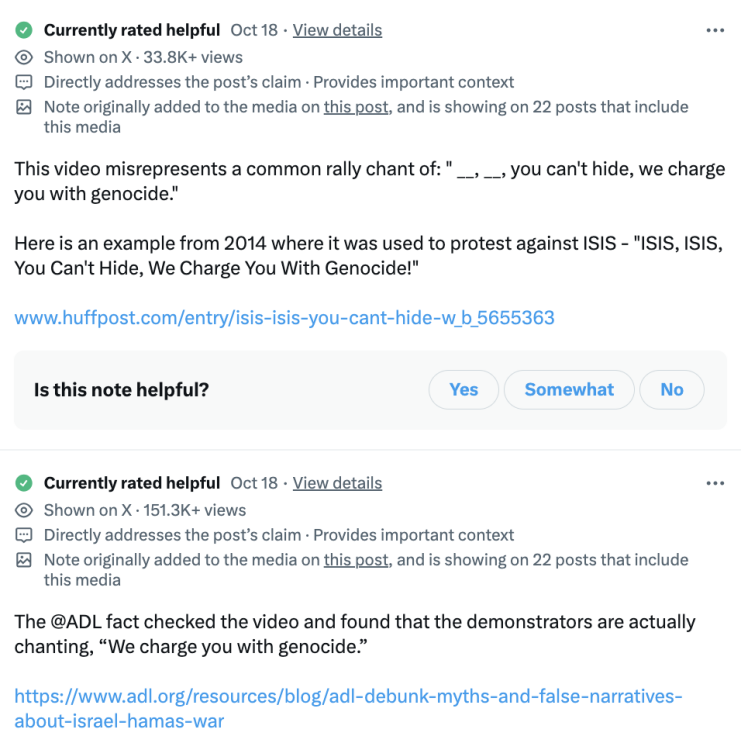

On the night of Oct. 17, X user @MichalSabra posted a video claiming to depict a chant from pro-Palestine students protesting at UPenn consisting of the phrase “We want Jewish genocide.”

However, users who watched the video could hear that this was false. The protesters were chanting “We charge you with genocide,” an accusatory slogan. The ADL also confirmed this in its own debunk of the claim.

When Mashable first started tracking the post, it had already received more than half a million views in 12 hours. Nearly 20 hours later, the first of two Community Notes that would later go on to be approved were submitted to be rated, meaning they were only visible to members of the Community Notes program — not the broader platform user base.

On Oct. 19, two days after @MichalSabra posted the falsehood, Mashable received a notification from X saying that the Community Note had been approved on the post and had been seen 100,000 times.

As of Nov. 28, the @MichalSabra post has not been deleted. It has amassed 3.9 million views. The two Community Notes that have been approved to show on the post only have a total of 185,000 views. The Community Note has only been seen by 4.7 percent of the users who viewed the original falsehood.

In addition, according to X, these two Community Notes also appear on 22 other posts containing @MichalSabra’s video and Community Notes include views from those posts in its cumulative metrics as well.

Disappearing Community Notes

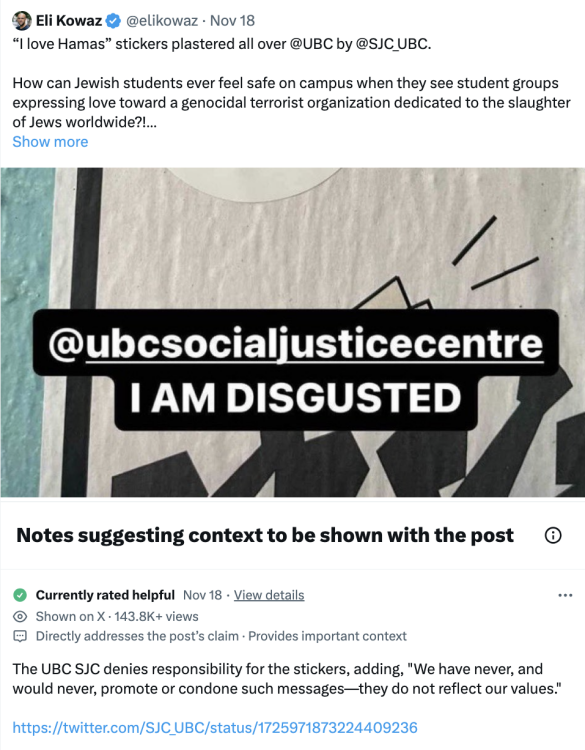

X user @elikowaz published a post on Nov. 18 depicting an “I heart Hamas” sticker that allegedly was printed and posted by the University of British Columbia’s Social Justice Centre on the college’s campus.

Within hours of the post, the UBC Social Justice Centre denied the allegations. Hillel BC later shared that a third party associated with the Jewish college campus organization was in fact responsible for the stickers.

When Mashable first started monitoring this post on Nov. 20, it had more than 600,000 views. The approved Community Note containing the fact-check had just over 25,000 views.

As of Nov. 28, @elikowaz’s post remains, now with one million views. However, Mashable noticed that even after the Community Note was approved, the original post containing the misinformation continued to amass more views than the fact-check.

In one week, @elikowaz’s post with the Community Note attached gained around 400,000 more views. This means that if Community Notes worked as intended, the fact-check would have around 425,000 views — or just under half of the views on the post it is attached to.

Instead, the Community Note only has 143,000 views.
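The arithmetic behind that comparison can be sketched in a few lines of Python. This is only an illustration using the rounded figures reported above; the function name is hypothetical, and the projection assumes that every view the post gained after approval would also have counted as a view of the note.

```python
def expected_note_views(note_views_at_start, post_views_at_start, post_views_now):
    """Project note views, assuming every new post view also displayed the note."""
    new_post_views = post_views_now - post_views_at_start
    return note_views_at_start + new_post_views


# Rounded figures reported for the @elikowaz post (Nov. 20 vs. Nov. 28).
expected = expected_note_views(25_000, 600_000, 1_000_000)
actual = 143_000

shortfall = expected - actual
share_of_expected = round(actual / expected * 100, 1)

print(f"Expected note views: {expected:,}")
print(f"Actual note views:   {actual:,}")
print(f"Shortfall:           {shortfall:,}")
print(f"Actual as share of expected: {share_of_expected}%")
```

Under these assumptions, the note reached about a third of the views it would have had if it had stayed attached the whole week.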

What happened? It appears the Community Note did not always appear affixed to the post even after the note was approved. Mashable noticed this same issue with other posts it was monitoring. Sometimes, a note would lose its approval and be relegated back to rating status, meaning only Community Notes program members could see the note. Other times, the note would disappear altogether and no longer be viewable on a post even by members of the Community Notes program.

X’s own Community Notes metrics raise questions

X has boasted about the number of views that Community Notes have received on the platform multiple times. On Oct. 14, X stated that Community Notes were “generating north of 85 million impressions in the last week” after Hamas’ Oct. 7 attack. Ten days later, in a press release sent to Mashable, X said that Community Notes “were seen well over 100 million times” over the past two weeks. Then on Nov. 14, X published a blog post claiming “notes have been viewed well over a hundred million times” in the “first month of the conflict.”

By X’s own metrics, unless traffic to the platform and the number of posts being made are also falling, it appears views on Community Notes are on a downward trend.
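One rough way to see that trend is to convert each of X's three statements into an average weekly rate. The sketch below is an illustration only: it treats "well over 100 million" as a floor of 100 million, and it assumes the "first month" is about 30 days. Both are simplifications, and the names are hypothetical.

```python
# (description, minimum views claimed, days covered by the claim)
claims = [
    ("Oct. 14 statement (last week)", 85_000_000, 7),
    ("Press release ten days later (two weeks)", 100_000_000, 14),
    ("Nov. 14 blog post (first month, assumed 30 days)", 100_000_000, 30),
]


def weekly_rate(views, days):
    """Average views per week over the period covered by a claim."""
    return views * 7 / days


for description, views, days in claims:
    rate = weekly_rate(views, days)
    print(f"{description}: about {rate / 1_000_000:.1f} million views per week")
```

Because the later figures are lower bounds, the real averages could be higher than these. Even so, the monthly figure would need to be far above 100 million to match the pace X reported for the first week.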

Furthermore, based on the virality of many of the posts spreading misinformation Mashable looked at, 100 million views on the totality of Community Notes is an extremely small number.

For example, on Nov. 9, X user @Partisangirl posted a video claiming it showed Israeli helicopters opening fire on Israel’s own citizens at the Supernova music festival on Oct. 7. The claim was debunked, as the video showed Israeli helicopters attacking Hamas at a separate location.

However, @Partisangirl’s post with the false claim garnered a whopping 30 million views as of Nov. 29. That’s one single 20-day-old X post with roughly 30 percent of the views that the entirety of Community Notes garnered in one month.

A Community Note was eventually placed on @Partisangirl’s post. The note only has around 244,200 views, or roughly 0.8 percent of the views that the original post received. The misinformation was viewed 123 times more than the fact-check.

Community Notes not being seen by most users

While the post from @Partisangirl is one of the more extreme examples, Mashable found that in most cases we viewed, there was a large disparity between the number of views on a post and the number of views on the Community Note attached to the same post.

One Oct. 19 post from regular disinformation spreader X user @DrLoupis attributed a false statement to Turkish President Erdoğan saying the country would intervene in Gaza. The @DrLoupis post containing the fake quote was viewed nine million times as of Nov. 29. Two separate Community Notes were submitted just a few hours after @DrLoupis published the post and were later approved. These two notes combined only have around 740,000 views.

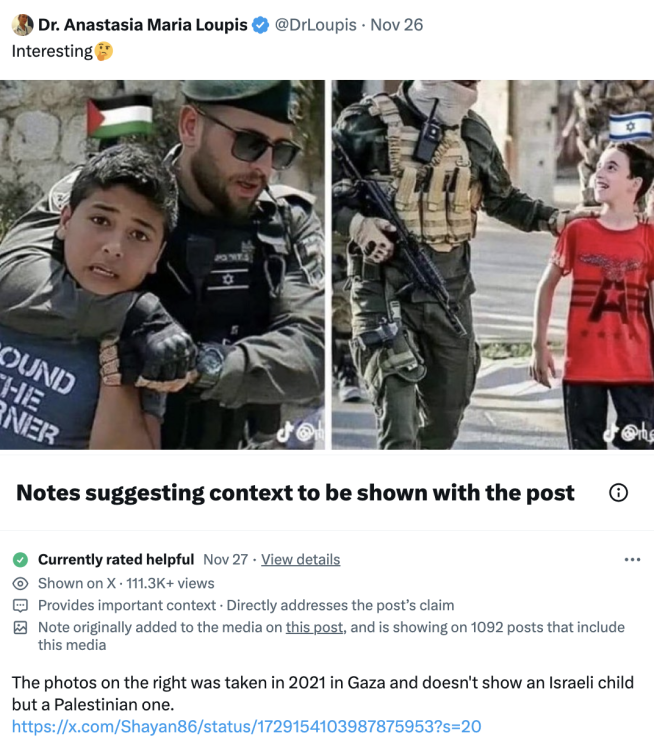

In another @DrLoupis post published on Nov. 26, the user falsely presents a photo of a child in Gaza as an Israeli boy. In three days, this false post racked up 6.3 million views. The Community Note that was eventually approved wasn’t submitted until nearly 27 hours after the post. Compared to the 6.3 million views the post has, the attached Community Note only has just over 111,000 views. The misinformation has 57 times more views than the fact-check.

Another major disinformation spreader on X, @dom_lucre, published a post on Oct. 23 claiming U.S. troops were attacked in Syria with an image depicting an explosion. The attachment was actually an edited picture depicting a 2018 Israeli airstrike in Gaza. X user @dom_lucre’s misinformation received nearly 800,000 views or around 10 times more views than the Community Note fact-check, which only received 82,400 views.

Verified users are big misinformation spreaders

Every account mentioned in this piece so far – @MichalSabra, @elikowaz, @Partisangirl, @DrLoupis, and @dom_lucre – is a subscriber of X’s paid verification service X Premium, formerly known as Twitter Blue.

Mashable did not set out to target these users. The posts we tracked were among some of the most viral posted on the platform. Some were discovered through X’s search feature. Others were found via X’s official @HelpfulNotes account, which shares posts that receive a Community Note. However, X gives X Premium subscribers an algorithmic boost, which in turn helps their posts permeate throughout the platform.

For example, X user @jacksonhinklle has become one of the most influential figures on the platform since Oct. 7, gaining millions of followers in just a few weeks. He’s also an X Premium subscriber and regular disseminator of disinformation.

On Nov. 8, @jacksonhinklle posted that Israeli sniper Barib Yariel was killed by Hamas. The post received 6.4 million views. However, Barib Yariel does not exist. Four separate Community Notes were approved on the post, with the first being submitted 10 hours after @jacksonhinklle’s post. All four Community Notes combined received around 639,300 views, or less than 10 percent of the views @jacksonhinklle’s post received.

Many X Premium subscribers, including a number of the individuals mentioned in this report, are monetized on the platform. This means that Musk’s company pays these individuals based on the number of other subscribers who view ads placed on their posts. On Oct. 30, Musk announced that the policy was changing and that posts that received a Community Note were no longer eligible for the ad revenue share program. However, as NewsGuard discovered in an analysis conducted weeks after Musk’s announcement, advertisements for major brands like Microsoft, Pizza Hut, and Airbnb were still appearing on posts containing misinformation about Israel and Palestine.

While this investigation mainly focused on misinformation regarding Israel and Gaza, Mashable did monitor other posts and found the same problems across posts about various issues and topics. For example, verified X user @LaurenWitzkeDE posted a video of a “lab grown drumstick” moving around and twitching on a table. @LaurenWitzkeDE claimed this was an example of “Bill Gates’ lab-grown mystery meat.” However, the video did not depict a real piece of chicken and was actually an art piece created by an artist on TikTok. The original @LaurenWitzkeDE post received 1.6 million views. The Community Note with the fact-check only garnered just over 203,000 views.

Mashable monitored 50 posts over the past two months. We excluded a few from our investigation after the Community Note was removed or disappeared from the post. Only three posts we tracked had Community Notes with around half as many views as the post containing the falsehood – two of which contained the same manipulated media.

Many times, misinformation on X spreads without any Community Note. In another common scenario, a Community Note is approved but later removed from the post. Among posts that receive a Community Note that remains affixed, the falsehood is often viewed around 5 to 10 times more than the fact-check. And sometimes, as the examples in this report show, that disparity is even larger.
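For readers who want to check the disparities themselves, the short Python sketch below recomputes them from the view counts cited in this report. It is illustrative only: several of the inputs are rounded ("nearly 800,000," "just over 111,000"), so the outputs are approximations, and the variable and function names are hypothetical.

```python
# (post views, combined Community Note views) as reported in this article.
posts = {
    "@MichalSabra": (3_900_000, 185_000),
    "@elikowaz": (1_000_000, 143_000),
    "@Partisangirl": (30_000_000, 244_200),
    "@DrLoupis (Oct. 19)": (9_000_000, 740_000),
    "@DrLoupis (Nov. 26)": (6_300_000, 111_000),
    "@dom_lucre": (800_000, 82_400),
    "@jacksonhinklle": (6_400_000, 639_300),
    "@LaurenWitzkeDE": (1_600_000, 203_000),
}


def disparity(post_views, note_views):
    """Return (post views per note view, note views as a percent of post views)."""
    ratio = post_views / note_views
    percent = note_views / post_views * 100
    return ratio, percent


# Sort from the largest gap to the smallest.
ranked = sorted(posts.items(), key=lambda item: disparity(*item[1])[0], reverse=True)

for account, (post_views, note_views) in ranked:
    ratio, percent = disparity(post_views, note_views)
    print(f"{account:<22} {ratio:6.1f}x more views on the post; note reached {percent:4.1f}%")
```

The note view counts include views from other posts carrying the same note where X reports cumulative metrics, so the true share of each post's viewers who saw a note could be lower than these figures suggest.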