Should we trust Amazon’s AI-generated review summaries?

Lately, users have noticed an influx of AI-generated summaries of product reviews on Amazon. Can they be trusted?

Amazon products often have hundreds or even thousands of reviews, and sifting through all that feedback can be tedious and time-consuming. Yet shopping on Amazon for a laptop stand, for example, often means comparing many options that vary drastically in quality, a workload more appropriate for buying a car than a quotidian household item. Last August, Amazon announced a solution to review fatigue: an AI-generated summary that succinctly highlights the pros and cons from customers.

Theoretically, the feature is a useful tool that helps consumers quickly decide what products to buy. But the appearance of these summaries underscores the pitfalls of relying on generative AI: inaccuracy and misleading information.

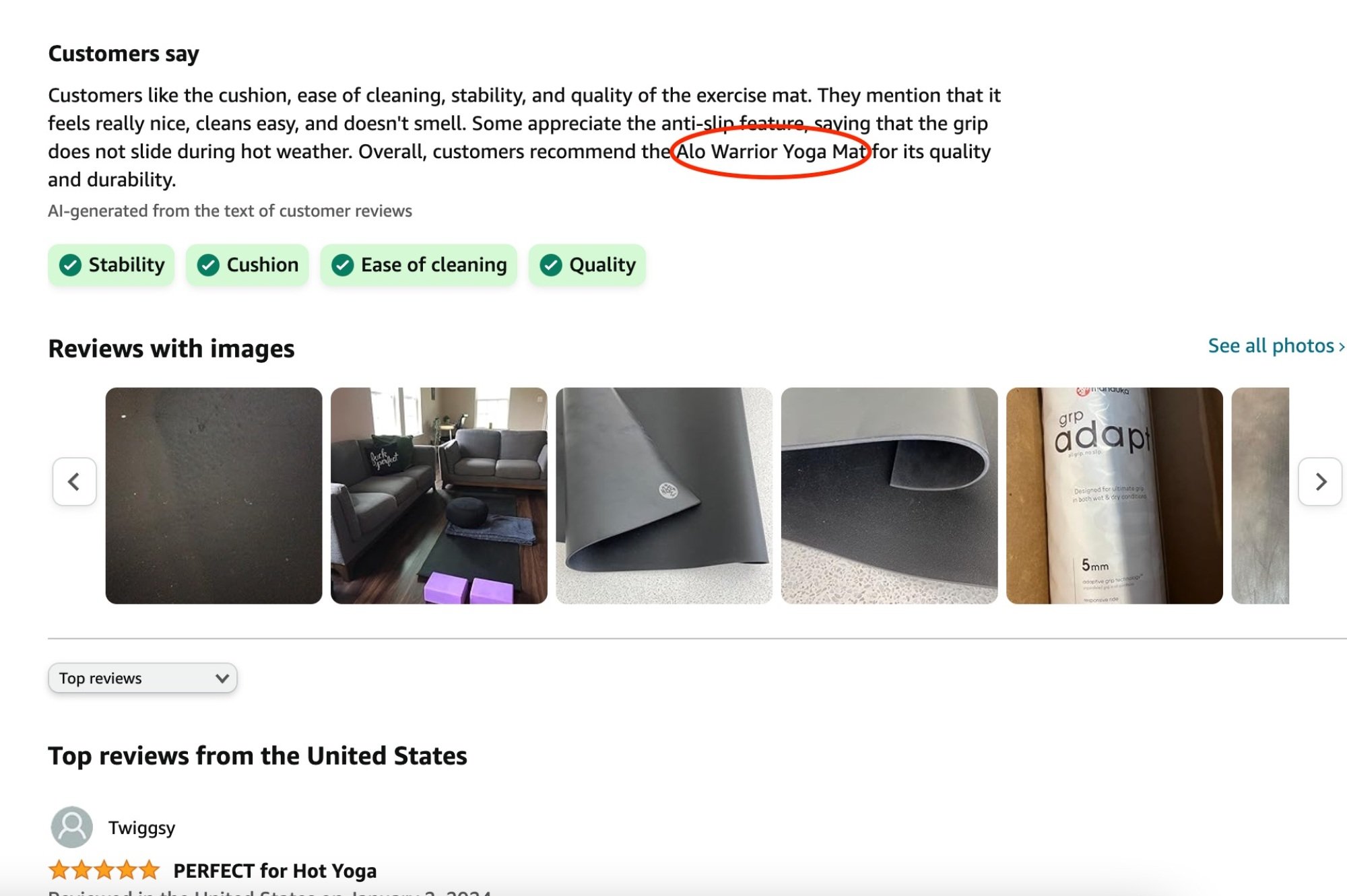

A quick search on Amazon surfaced several issues. An AI-generated summary of reviews for the Manduka GRP Adapt Hot Yoga Mat referred to a different yoga mat by a competitor brand, calling it the “Alo Warrior Yoga Mat.” Amazon has since resolved this specific issue after Mashable brought it to the company’s attention. But fixing individual inaccuracies in the outputs of a large language model is a bit like Whac-A-Mole, because not even the engineers fully understand the models’ behaviors.

And therein lies the problem with relying too heavily on generative AI. Training AI to behave autonomously also means models can “act out” in unintended or baffling ways.

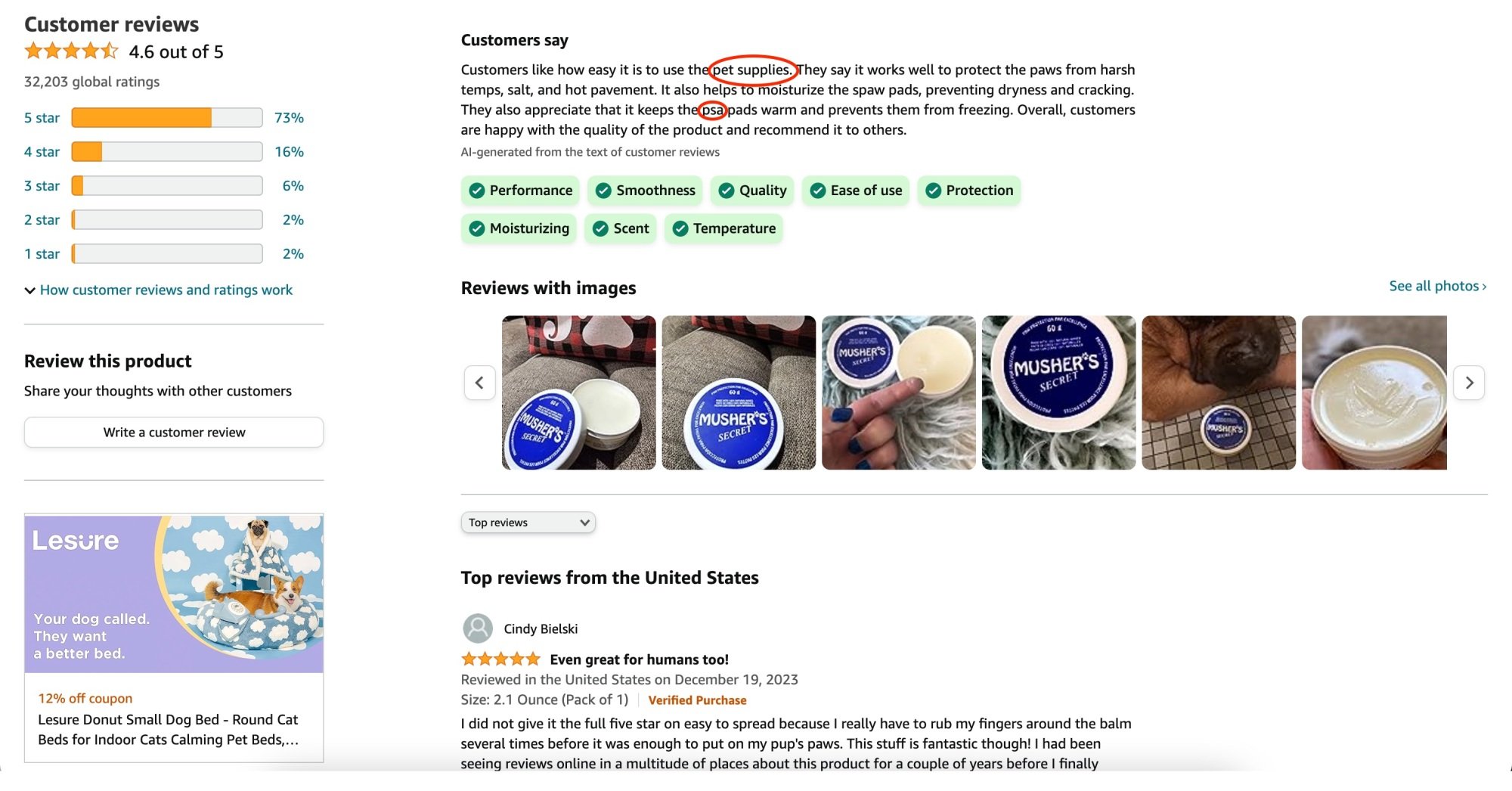

In another minor example, the AI review summary for a product called Musher’s Secret referred to it vaguely as “pet supplies” and said that it keeps “the psa pads warm.” Since Musher’s Secret is an ointment that protects dogs’ paws from icy pavement, that’s probably meant to be “paw pads,” unless “psa pads” are something we don’t know about. It would seem the model “learns” to write things like “psa pads” in place of “paw pads” from the idiosyncrasies of real users, which would arguably lend the AI’s outputs a certain authenticity. But is that what users should want?

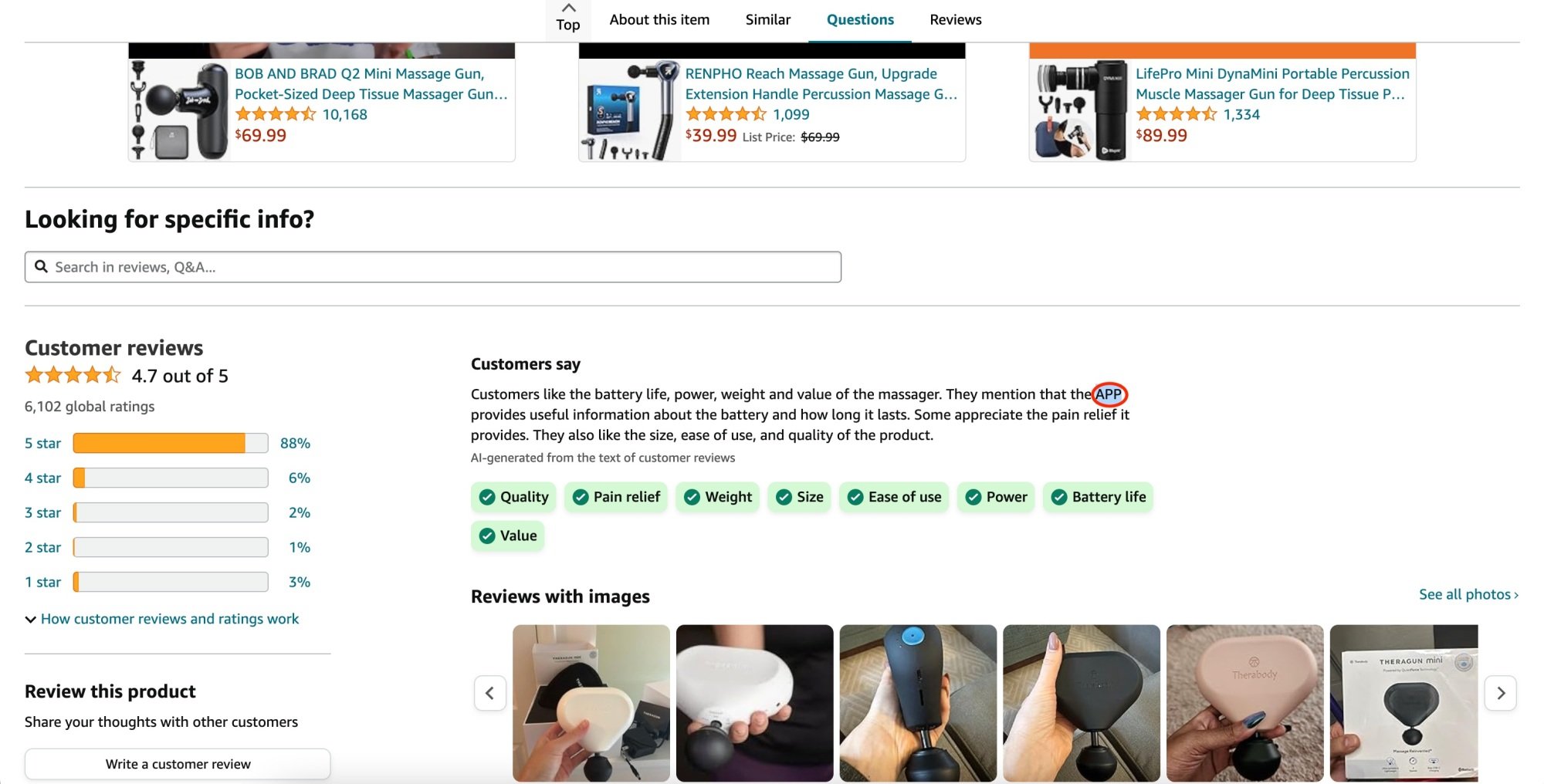

For a TheraGun mini massage gun, the review summary puts the word “app” in all caps, so it reads “they mention that the APP provides useful information about the battery and how long it lasts.” It’s reasonable to assume the summary is talking about the accompanying app, but what if it were instead referring to a technical feature called “APP”?

Granted, these are minor errors that don’t affect the essence of the summary. Plus, human reviews contain typos all the time, and that doesn’t necessarily destroy their credibility. But perhaps the bar should be higher for a non-human intelligence that hasn’t earned our trust yet, so any inaccuracy or gibberish feels like an immediate red flag.

Still worse: if inaccuracies and hallucinations go unnoticed, these summaries, presented as more or less authoritative, could damage the reputations of products. A Bloomberg report found that the product review summaries exaggerate the negative aspects of reviews, which misleads consumers. The AI-generated summary for Penn tennis balls with a 4.7-star rating highlighted odor as a negative. But of the 4,300 ratings, “only seven reviews mention an odor.” Not only does this mislead customers, it might also create problems for merchants.

Plus, the reviews didn’t specify the type of odor, but don’t all fresh tennis balls have that pungent rubbery smell that some people even enjoy? References to things like the smell of tennis balls seem less like the inclusion of legitimate complaints, and more like the intrusive voices of Karen-style reviewers who disproportionately give products negative reviews due to something unreasonable like packaging being hard to open.

This use of the technology also raises questions about what should and shouldn’t have AI-generated review highlights. For quality control and to ensure products don’t have AI-generated summaries of scammy reviews, Amazon only uses Verified Purchase reviews, and focuses on products that have “a minimum number of reviews,” and only in situations in which “customers share the same opinion,” said spokesperson Maria Boschetti.

Currently, Amazon does not have review highlights for books, which seems like a good thing. But it does have review highlights for medicine like Advil ibuprofen, which may not rise to the level of a potential danger, but does suggest a certain lack of caution in the rollout of this feature. Amazon says it plans to expand into more categories, so caution does not seem to be on the menu at the moment.

While it wasn’t hard to find flawed examples, customers are already finding the feature useful. A Mashable employee looking for an inexpensive tripod was able to decide by comparing the summaries of different product reviews, and picked one that had no negative feedback, compared to others that had slightly more mixed sentiments.

“Our analysis has found that review highlights are helping customers find the products they want and are leading to increased sales for sellers,” said Boschetti. “We care a lot about accuracy, and we will continually improve the review highlights experience over time.”

And let’s be honest, most people can’t or don’t want to spend the time parsing through reviews when a simple summary will suffice — as long as it’s accurate.

So should you trust these review summaries? A better question might be whether they are more convenient for users than the previous system, and how far that convenience should be trusted. One could, very cautiously, argue that the answer is to “trust, but verify,” by understanding the technology’s flaws and weaknesses.